This week, OpenAI announced its latest models: o3 and o4-mini. These are reasoning models, which break down a prompt into multiple parts that are then addressed one at a time. The goal is for the bot to “think” through a request more deeply than other models might, and arrive at a deeper, more accurate result.

While there are many possible functions for OpenAI’s “most powerful” reasoning model, one use that has blown up a bit on social media is geoguessing, the act of identifying a location by analyzing only what you can see in an image. As TechCrunch reported, users on X are posting about their experiences asking o3 to pinpoint locations from random photos, and sharing glowing results. The bot will guess where in the world it thinks the photo was taken, and break down its reasons for thinking so. For example, it might say it zeroed in on a license plate color that denotes a particular country, or that it noticed a particular language or writing style on a sign.

According to some of these users, ChatGPT isn’t using any metadata hidden in the images to help it identify the locations: Some testers are stripping that data out of the photos before sharing them with the model, so, theoretically, it’s working off of reasoning and web search alone.
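For the curious, here is a rough idea of what stripping that data can look like. This is a minimal Python sketch, not the method those testers used: it assumes a simple, well-formed JPEG, and the function name `strip_app1_segments` is made up for illustration. It drops the APP1 segments, which is where EXIF data (including GPS coordinates) is normally stored, and leaves everything else alone. Other metadata, such as IPTC fields or embedded comments, would survive.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker that opens every JPEG
SOS = 0xDA         # start-of-scan: compressed pixel data follows
APP1 = 0xE1        # segment type that normally carries EXIF (and XMP)


def strip_app1_segments(jpeg: bytes) -> bytes:
    """Return a copy of the JPEG bytes with all APP1 segments removed.

    Hypothetical helper for illustration. Assumes every segment before
    the scan data has the usual marker + 2-byte length layout.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at byte {pos}")
        marker = jpeg[pos + 1]
        if marker == SOS:
            # From here on it is image data; copy it through untouched.
            out += jpeg[pos:]
            return bytes(out)
        # The length field counts itself (2 bytes) plus the payload.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        end = pos + 2 + length
        if marker != APP1:
            out += jpeg[pos:end]
        pos = end
    out += jpeg[pos:]
    return bytes(out)


# Tiny synthetic "JPEG": APP0 header, an EXIF segment, then scan data.
sample = (
    SOI
    + b"\xff\xe0" + struct.pack(">H", 4) + b"JF"
    + b"\xff\xe1" + struct.pack(">H", 8) + b"Exif\x00\x00"
    + b"\xff\xda" + b"\x00\x02" + b"pix" + b"\xff\xd9"
)
cleaned = strip_app1_segments(sample)
print(b"Exif" in sample, b"Exif" in cleaned)
```

For a real photo, you would read the file in binary mode, pass the bytes through the function, and write the result to a new file. Dedicated tools handle far more edge cases than this sketch does.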

On the one hand, this is a fun task to put ChatGPT through. Geoguessing is all the rage online, so making the practice more accessible could be a good thing. On the other, there are clear privacy and security implications here: Someone with access to ChatGPT’s o3 model could use the reasoning model to identify where someone lives or is staying based on an otherwise anonymous image of theirs.

I decided to test out o3’s geoguessing capabilities with some stills from Google Street View, to see whether the tool lived up to the internet hype. The good news is that, from my own experience, this is far from a perfect tool. In fact, it doesn’t seem like it’s much better at the task than OpenAI’s non-reasoning models, like 4o.

Testing o3’s geoguessing skills

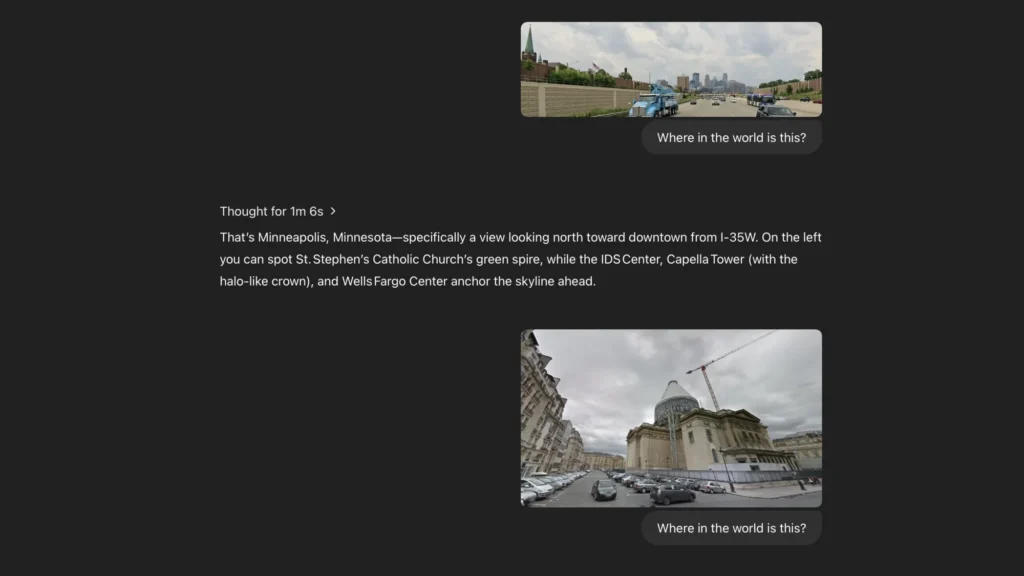

o3 can handle clear landmarks with relative ease: I first tested a view from a highway in Minnesota, facing the skyline of Minneapolis in the foreground. It took the bot only a minute and six seconds to identify the city, and it worked out that we were looking down I-35W. It also instantly identified the Panthéon in Paris, noting that the screenshot was from the time it was under renovation in 2015. (I didn’t know that when I submitted it!)

Credit: Lifehacker

Next, I wanted to try non-famous landmarks and locations. I found a random street corner in Springfield, Illinois, featuring the city’s Central Baptist Church, a red brick building with a steeple. This is when things started to get interesting: o3 cropped the image into multiple parts, looking for identifying characteristics in each. Since this is a reasoning model, you can see what it’s looking for in certain crops, too. Like other times I’ve tested out reasoning models, it’s weird to see the bot “thinking” with human-like interjections (e.g., “Hmm,” “but wait,” and “I remember”). It’s also interesting to see how it picks out specific details, like noting the architectural style of a section of a building, or where in the world a certain park bench is most commonly seen. Depending on where the bot is in its thinking process, it may start to search the web for more information, and you can click those links to investigate what it’s referencing yourself.

Despite all this reasoning, this location stumped the bot, and it wasn’t able to complete the analysis. After three minutes and 47 seconds, the bot seemed like it was getting close to figuring it out, saying: “The location at 400 E Jackson Street in Springfield, IL could be near the Cathedral Church of St. Paul. My crop didn’t capture the whole board, so I need to adjust the coordinates and test the bounding box. Alternatively, the architecture might help identify it—a red brick Greek Revival with a white steeple, combined with a high-rise that could be ‘Embassy Plaza.’ The term ‘Redeemer’ could relate to ‘Redeemer Lutheran Church.’ I’ll search my memory for more details about landmarks near this address.”



The bot correctly identified the street, but more impressively, the city itself. I was also impressed by its analysis of the church. While it was struggling to identify the specific church, it was able to analyze its style, which could have put it on the right path. However, the analysis quickly fell apart. The next “thought” was about how the location might be in Springfield, Missouri or Kansas City. This is the first time I saw anything about Missouri, which made me wonder whether the bot had confused the two Springfields. From here, the bot lost the plot, wondering if the church was in Omaha, or maybe that it was the Topeka Governor’s Mansion (which doesn’t really look anything like the church).

It kept thinking for another couple of minutes, speculating about other locations the block could be in, before pausing the analysis altogether. This tracked with a subsequent experience I had testing a random town in Kansas: After three minutes of thinking, the bot thought my image was from Fulton, Illinois (though, to its credit, it was pretty sure the picture was from somewhere in the Midwest). I asked it to try again, and it thought for a while, again guessing wildly different cities in various states, before pausing the analysis for good.

Now is not the time for fear

The thing is, GPT-4o seems to be about even with o3 when it comes to location recognition. It was able to instantly identify the Minneapolis skyline and immediately guessed that the Kansas photo was actually in Iowa. (It was incorrect, of course, but it was quick about it.) That seems to align with others’ experiences with the models: TechCrunch was able to get o3 to identify one location 4o couldn’t, but the models were evenly matched other than that.

While there are certainly some privacy and security concerns with AI in general, I don’t think o3 in particular needs to be singled out as a specific threat. It can be used to correctly guess where an image was taken, sure, but it can also easily get it wrong—or crash out entirely. Seeing as 4o is capable of a similar level of accuracy, I’d say there’s as much concern today as there was over the past year or so. It’s not great, but it’s also not dire. I’d save the panic for an AI model that gets it right almost every time, especially when the image is obscure.

Regarding the privacy and security concerns, OpenAI shared the following with TechCrunch: “OpenAI o3 and o4-mini bring visual reasoning to ChatGPT, making it more helpful in areas like accessibility, research, or identifying locations in emergency response. We’ve worked to train our models to refuse requests for private or sensitive information, added safeguards intended to prohibit the model from identifying private individuals in images, and actively monitor for and take action against abuse of our usage policies on privacy.”
